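《鄉民大學問》EP.37 (subtitled) | Is Tainan's political map shifting? Does Hsieh Lung-chieh (謝龍介) have a chance? Huang Wei-che (黃偉哲) tells all! The opening skirmish of the Tainan mayoral race begins! Chen Ting-fei (陳亭妃), Wang Ting-yu (王定宇), and Lin Chun-hsien (林俊憲) are quietly paddling beneath the surface; Chiu Ming-yu (邱明玉) reveals who has the best odds! Has Huang Chih-hsien (黃智賢) turned green? Did her brother hijack her account? Huang Wei-che's bold reply has the studio in stitches!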

NOW Video

Highlights

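People / New Taipei council speaker Chiang Ken-huang (蔣根煌) hurls a five-character expletive in the chamber; he once blurted out that homosexuality is a breeding ground for AIDS

The New Taipei City Council held a preparatory meeting yesterday afternoon, but a clash broke out within the Kuomintang (KMT). Speaker Chiang Ken-huang, a KMT member, was angered that fellow KMT councilor Chen Ming-yi (陳明義) snatched the lot-drawing canister during the meeting. He lost his temper on the rostrum, shouted the five-character expletive「X你娘XX」, and threw a mobile phone at Chen. Chiang has been rooted in Xinzhuang for more than 20 years, ...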

2024-04-24 08:00

-

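TSMC opens up NT$16; Taiwan stocks gain 323 points in early trading, aiming to retake the 20,000-point mark

US stocks all closed higher yesterday (the 23rd), with semiconductor shares strong and the Philadelphia Semiconductor Index surging 2.21%. This lifted Taipei stocks across the board today (the 24th): the index opened up 171.18 points at 19,770.46 and then, buoyed by gains in heavyweight stocks, rose 323.83 points, ...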

2024-04-24 09:02

-

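一粒's older sister sweetly goes public with boyfriend Sung Chia-hsiang (宋嘉翔)! Spotted at the Rakuten farm-team ballpark, the romance had long left clues

TSG Hawks cheerleader "一粒" shot to fame for her hypnotic dance to the "葉保弟 cheer song," and her ethereal twin older sister Joy became popular along with her. Today (the 23rd), Joy sweetly revealed on IG her ballplayer boyfriend: Rakuten Monkeys catcher Sung Chia-hsiang, a highly touted prospect. In fact, Joy had long ...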

2024-04-23 16:58

-

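SUV hits a NT$10-million Porsche in Neihu! Driver opens the door to check and nearly scrapes a big Mercedes-Benz; the outcome revealed

At nearly 2 p.m. on the 21st, a Porsche leaving an auto accessories shop on Section 1 of Jiuzong Road in Taipei's Neihu District failed to yield to moving traffic and, with a blind spot also a factor, collided with an SUV traveling straight from behind. The Porsche's left-side body panels were damaged; fortunately, neither driver was hurt. Afterward, a car owner took this dashcam footage and ...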

2024-04-24 09:09

Top News

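Insider / Few counseling officers, heavy workloads: Wellington Koo (顧立雄) faces a hard task reducing self-harm cases as he takes over the Ministry of National Defense

Since the start of this year, the armed forces have seen a string of deaths from various causes. On the 22nd came another report: a deputy plant chief surnamed Chang (張) at the repair plant of the Chiayi Joint Maintenance Depot, Fifth Support Command, Army 10th Army Corps, died of self-inflicted harm off base while on leave. This is the military's 16th self-harm case so far this year. The military's self-harm cases have remained persistently high, which is attributed to unit counseling officers' ...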

2024-04-24 08:32

-

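New NCC chair reportedly already picked, and it's "him"; Kuo Cheng-liang (郭正亮) exclaims "there's a problem" and floats one proposal

Four members of the National Communications Commission (NCC), including Chair Chen Yaw-shyang (陳耀祥), will see their terms expire in July. Liu Po-li (劉柏立), director of Research Division IV at the Taiwan Institute of Economic Research, is rumored to be the pick to take over as chair. In response, former legislator Kuo Cheng-liang said flatly that "there's a problem" and argued for abolishing the NCC's cable TV license adjudication ...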

2024-04-24 07:47

-

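Breaking the "men over women" image! Cho Jung-tai's (卓榮泰) cabinet already has 8 women members, surpassing Chen Chien-jen's (陳建仁)

Cho Jung-tai, tapped to take over as premier after May 20, has been unveiling his incoming cabinet members one by one. Among those confirmed so far, women already number 8, including Vice Premier-designate Cheng Li-chiun (鄭麗君), surpassing the cabinet of current Premier Chen Chien-jen. Among current ministry heads, excluding independent agencies, the women cabinet members include Finance Minister Chuang Tsui-yun (莊翠雲) ...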

2024-04-24 07:30

Offbeat

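《池水抽光好吃驚》comes to Taiwan! Chung Hsing Lake (中興湖) is drained, revealing a "shark-like" invasive species

The well-known Japanese variety show《池水抽光好吃驚》came to Taiwan this March. Host Atsushi Tamura (田村淳) and National Chung Hsing University students formed a "pond-draining Taiwan-Japan team" of as many as 100 people and drained Chung Hsing Lake on the university's campus. They netted nearly 300 creatures, among them even a "shark-like" invasive species ...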

2024-04-23 18:07

-

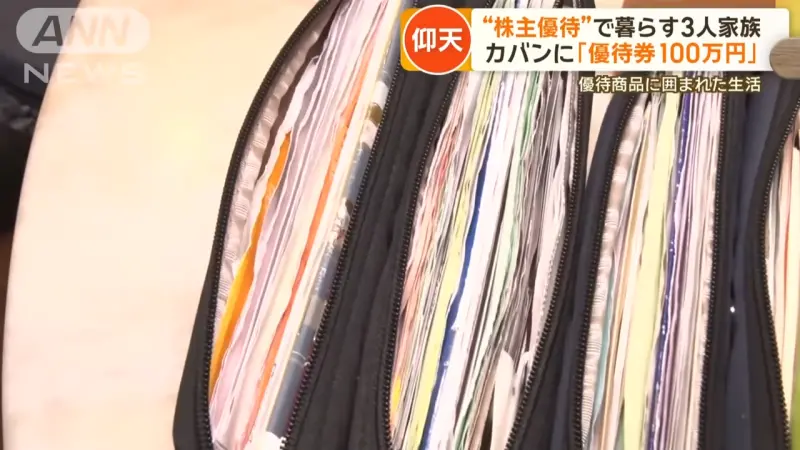

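Bought 710 stocks in 7 years and never sold! Family of 3 lives well on "shareholder perks": no need to spend money when going out

Taiwan has entered peak shareholder-meeting season, and companies have begun handing out shareholder-meeting souvenirs. Leofoo (六福, 2705), for example, will give admission tickets worth NT$1,199 to 60,000 shareholders, while China Steel (中鋼, 2002) is giving out a highly practical antibacterial multi-function stainless-steel cutting board set; such gifts always spark wide discussion. In Japan, meanwhile, there is an even more remarkable person ...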

2024-04-23 16:56

-

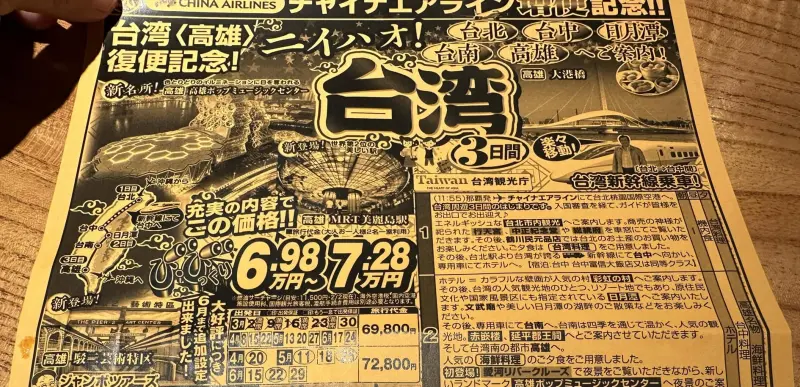

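Japanese travel agency offers a 3-day Taiwan tour! Tour fee of "under 15,000" is dirt cheap; people are stunned on seeing the full itinerary

Domestic-travel lodging prices in Taiwan have drawn much attention in recent years, with many people complaining that "flying abroad is cheaper than traveling in Taiwan." The debate kept building and left many lodging operators with dismal booking rates during this year's Lunar New Year holiday. Recently, however, a Japanese travel agency launched a 3-day, 2-night Taiwan itinerary with a tour fee of, surprisingly, only 10,000 ...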

2024-04-23 16:09

-

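統神's YouTube channel "deleted over a gaffe after 6 years"! He speaks out angrily after 2 appeals are rejected

Well-known streamer "統神" Chang Chia-hang (張嘉航) recently sparked controversy with a shocking misstatement while commenting on the Mickey Huang (黃子佼) scandal. Not only did multiple game companies then end their partnerships with him, but his 6-year-old YouTube channel was also deleted outright by the platform. In response, 統神 posted on the evening of the 22nd, sharing for the first time how he has been doing, ...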

2024-04-23 08:28

Entertainment

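Recent photo of 72-year-old Chang Fei (張菲) surfaces! He wears a gold watch to the dentist; Ho Yu-wen (何妤玟) runs into him and exclaims "what a sturdy build"

Variety-show veteran Chang Fei (菲哥) faded from the screen after ending his hosting job on the show《綜藝菲常讚》in 2018 and moved to Hualien to live in semi-retirement. His most recent public appearance was last March, in support of Shen Wen-cheng's (沈文程) concert. Actress Ho Yu-wen yesterday (the 23rd) shared on her fan page an intimate photo with Chang Fei, 72 ...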

2024-04-24 08:05

-

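Mavis Hee (許美靜) blasted for coasting through her concert; Hsu Chang-te (許常德) bucks the tide to back her: "the commercial-show company was unprofessional"

49-year-old Singaporean singer Mavis Hee recently held a "music fan meeting" in Nanjing, China, drawing many fans to buy tickets. However, the show was scheduled for 2.5 hours, while the actual singing time was only 30 minutes. On top of the pricey tickets, even her signature hits were sung by backing vocalists in her place, leaving many fans very dissatisfied, ...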

2024-04-23 22:26

-

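ILLIT's fan name stirs controversy for a second time! Seniors NMIXX and Lisa get dragged in; the agency hurriedly reselects to put out the fire

On April 21, South Korean rookie girl group ILLIT revealed their fan name in a Weverse livestream. The name, chosen jointly by the members, is "LILLY (릴리)," which is also the English word for the lily flower and symbolizes the unchanging love in the flower's meaning. The announcement drew considerable controversy, however, with some fans pointing out ...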

2024-04-23 21:17

-

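一粒's "hypnotic cheer song" appeared 16 years ago! TWICE and NewJeans have both paid public tribute to it

CPBL TSG Hawks cheerleader "一粒" (Chao Yi-li, 趙宜莉) burst onto the scene in recent weeks, just as Korean cheerleaders such as Lee Da-hye (李多慧), 安芝儇, 李晧禎, and 邊荷律 were flooding social feeds, and her IG following grew a hundredfold in just 1 month. Her hypnotic dance to the ultra-catchy "葉保弟 cheer song" made her an overnight sensation, attracting ...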

2024-04-23 20:35

Sports

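Hsu Chi-lin (徐基麟) chases a major record held by aces such as Chen Yi-hsin (陳義信) and 風神! He is also the CTBC Brothers' lifesaver

CTBC Brothers starter Hsu Chi-lin delivered a quality start of 6 scoreless innings against the Fubon Guardians on the 18th, taking 3 wins in his first 3 games of the season. If he wins today (the 24th) against the Rakuten Monkeys, he will match Chen Yi-hsin in 1992, 卡洛索 in 1998, 風神 in 2002, and 克蘭頓 in 2015 ...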

2024-04-24 08:49

-

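Asia's longest-distance, largest triathlon is in Taiwan; top racers from around the world gather to compete

The Taiwan leg of the 2024 CHALLENGE FAMILY international triathlon (hereafter CHALLENGE TAIWAN, CT) will hold its annual event in Taitung from April 25 to 28. Nearly 10,000 triathletes will gather in Taitung in a burst of energy; among them, professional athletes ...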

2024-04-24 02:55

-

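National High School Games swimming / Jianguo High School top student Chang Wei-tang (張暐堂) wins his first career gold; the key to victory: an Olympic gold-medal playbook

Swimming at the ROC Year 113 (2024) National High School Games entered day 4 today (the 23rd). Results were unremarkable, with no one able to break a meet record, but host Taipei City's schools stood out, taking 9 of the 16 golds awarded today. Taipei Municipal Jianguo High School, the junior high division of Taipei Municipal Xisong High School, and Taichung Municipal Dali High School each won 2 golds, the most ...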

2024-04-24 02:13

-

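National High School Games athletics / A Taiwanese super rookie is born! Hsieh Yuan-kai (謝元愷) breaks the national record again in the 110 m hurdles

Day 3 of athletics at the ROC Year 113 (2024) National High School Games brought more good news. Hsieh Yuan-kai of Kaohsiung's 鳳山商工 ran 13.39 seconds in the senior high boys' 110 m hurdles final, rewriting the U20 national record he himself held. Lin Tzu-chieh (林子婕) of New Taipei's Mingde High School ran 13.80 seconds in the junior high girls' 100 m hurdles final, breaking the meet record and rising to U ...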

2024-04-24 01:51

Finance & Lifestyle

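3 days only! 7-11 Americano is buy 1 get 1 free, Korean instant noodles are half price; second cup of latte or bubble milk tea for NT$10

7-11's value Friday-Saturday-Sunday coffee deals are out! For a limited 3 days, specialty Americanos are buy 1 get 1 free, and Korean jajang ramen is also buy 1 get 1 free, equivalent to half price. In addition, today (the 24th) stores are counting down the final hours of a "second cup for NT$10" deal on oat-series drinks, with oat latte and brown sugar pearl oat milk both included in ...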

2024-04-24 08:41

-

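National-level alerts were slow! "Earthquake early-report app" goes viral; people are stunned that the developer is a 17-year-old high school student

Since the April 3 Hualien earthquake, aftershocks large and small have occurred every day. On the 22nd there was even a run of consecutive quakes, and in the early hours of the 23rd there were two aftershocks above magnitude 6 on the Richter scale. Many people were too frightened to sleep, which created "heavy demand" for earthquake notification apps; many netizens went online to ask for recommended ...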

2024-04-24 06:58

-

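Accidentally sent an unflattering photo on LINE and "couldn't unsend it"! Horrified woman rushes to apologize; many have used the app for years without knowing

LINE has become the social app that Taiwanese use most in daily life, and it is very convenient for both work and keeping in touch with family and friends. Whenever we send the wrong message or post in the wrong chat, we habitually use LINE's "unsend" feature. Recently, however, a netizen discovered that LINE's "official ...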

2024-04-24 05:56

-

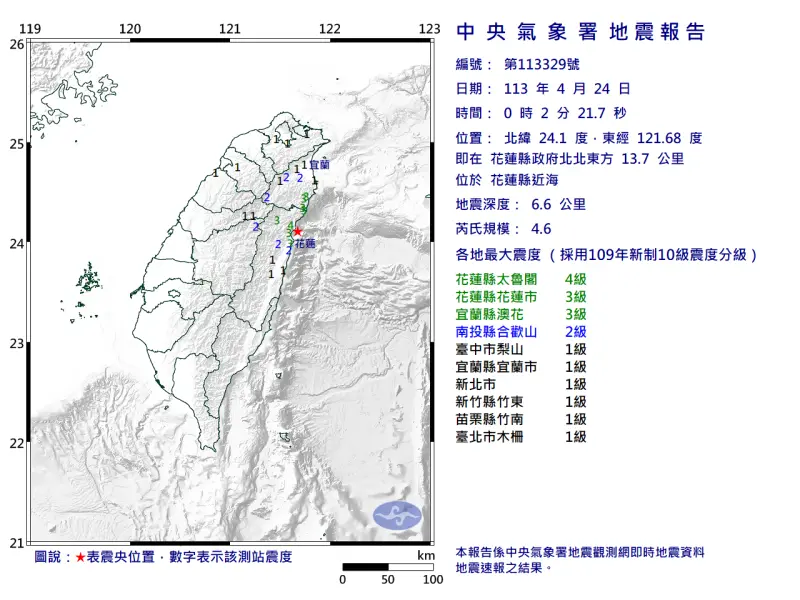

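Breaking / Shaking again late at night! Magnitude 4.6 very shallow earthquake hits Hualien at 00:02; netizens lament: how are we supposed to sleep

The earth stirred again late at night! At 00:02 today (the 24th), a magnitude 4.6 earthquake on the Richter scale struck Hualien. The epicenter was 13.7 km north-northeast of the Hualien County Government, offshore of Hualien County, at a depth of 6.6 km, making it a very shallow earthquake. Shaking was felt in 8 counties and cities across Taiwan. The earthquake ...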

2024-04-24 00:10

World

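Japan to switch to new banknotes in July! Japanese media point to 2 likely scenarios; vending machines may become unusable

Japan will officially issue new banknotes on July 3. The 10,000, 5,000, and 1,000 denominations will be redesigned, but old notes can continue to be used. What problems will follow the issuance? Japanese media recently noted that it may further raise the share of cashless transactions, while the vending machines commonly seen on the streets will also face being unable to accept ...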

2024-04-24 08:25

-

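Four major US stock indexes rally! Tesla's earnings are dismal, yet its shares jump 10% after hours

US stocks closed higher again on Tuesday (the 23rd), with all four major indexes rallying. This week is the super earnings week for US companies, and four of the Magnificent Seven US tech stocks will report in succession this week. Tesla, the first to release results after the close, was as dismal as expected, yet its shares still managed to rise 10% after hours. Many large ...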

2024-04-24 06:54

-

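Does "AI-ifying" a voice count as infringement? In China's first "AI voice infringement" case, the first-instance ruling goes to the voice actor

The artificial intelligence (AI) boom is sweeping the globe, and many companies have rolled out AI-related businesses to cut labor costs, but infringement disputes that may arise during AI training have also begun to appear. On Tuesday, China also heard its first "AI voice infringement lawsuit," in which the plaintiff, a voice actor, claimed that their own "voice" was ...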

2024-04-24 00:19

-

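Huawei catches up! Apple's market share in China falls to 15.7%; Huawei's sales grow 70%

According to the latest data from market research firm Counterpoint, Apple's shipments in the China market in the first quarter of 2024 fell sharply from the same period last year, with its share dropping to 15.7%, its worst single-quarter showing since the pandemic broke out in 2020. By contrast, China's domestic ...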

2024-04-23 23:33